By Glynn Wilson –

Tales From the MoJo Road –

SAN FRANCISCO, Calif. – We were somewhere around Sunnyvale headed north at high speed on 101 in the Forest Green Gwyneth Ford, a media camper van, when the sun finally burned through the fog, just in time to reveal the view of South Bay. The sativa gummy was kicking in as I ejected the cassette tape and hit search on the radio dial. It stopped just as the London Bach Choir began singing, “You can’t always get what you want.”

Turn it up.

Then the Rolling Stones came in, adding, “But if you try sometimes, you just might get what you need.”

Story of my life.

You Can’t Always Get What You Want: The A.I. Bubble Will Burst

As promised in the original “Good Morning Coulterville! Premiere Broadcast” on Yosemite Radio, we are now going to talk about the evolutionary science on altruism vs. the selfish gene, in this place where the movement toward “effective altruism” has taken root – alongside its evil twin brother, “selfish narcissism.”

When the winner in this most fundamental of human struggles is exposed, we will know whether there is a future for some semblance of an “American Dream” of democracy – or whether the jig was up a long time ago and the end was inevitable all along, like Hunter Thompson tried to warn us. Like the Roman Empire, the Spanish Empire and all the other empires that lasted about 250 years. A milestone the United States hits in 2026.

R-Evolution, a 45-foot-tall illuminated sculpture by renowned artist Marco Cochrane, will be on view in Embarcadero Plaza from April 2025 through 2026. Originally unveiled at Burning Man, this monumental artwork embodies strength, resilience, and the power of representation. By Glynn Wilson

If Nixon was not bad enough to crush the whole thing and bring it crumbling to its knees, along with a couple of Bushes, the Golden Flake Trump and his buddies Elon Musk and Mark Zuckerberg are giving it a go. All in the name of trying to make America “great again” and the world “connected,” as Zuckerberg said after vowing to “move fast and break things.”

Massive amounts of research show that all social media did was make us feel less connected and more divided than ever.

The Social Media Paradox: More Friends, Less Connection

How tech platforms fuel U.S. political polarization

It did break things, including Public Understanding of Science and American democracy. Now Zuck wants to “bring personal superintelligence to everyone,” whether they like it or want it or not? No thanks on the glasses, dude.

Zuckerberg’s Vision for ‘Personal Superintelligence’

Nobody around here ever thought America was anything but great all along, and that would include Steve Jobs, Steve Wozniak and Tim Cook from Baldwin County, Alabama, now the head of Apple.

Apple CEO Tim Cook From South Alabama Stands Up to FBI

What are these misfits and college dropouts who now want to control every aspect of our lives talking about? If they had ever bothered to attend a class or read a book on the science of evolution, philosophy, history, some great American literature, or even business ethics, they might have done great things for America and the planet. Instead they have us all hurtling toward a deep dark abyss at the end of all things, like “the Nothing” that consumes Fantasia in “The NeverEnding Story.”

STOP!

How many billions and trillions do you need to be happy, men? Will it ever be enough?

Please step down, retire and give away all your money like Bill Gates and Warren Buffett. Go play in your secret underground bunker on the black lava sand beach in Hawaii. You’ve done enough damage.

As I was looking out on this great city with a view and thinking about how to go about communicating what the problems are and how to solve them, the muse took me back to the basic issue I’ve been writing about for years. How to counter the selfish gene – now pumped up on steroids – by flooding the world with altruism.

If you do a search of the New American Journal for “altruism,” you will see 53 stories, most notably this:

Can Altruism Trump Selfishness to Save Democracy and Planet Earth?

But as I was doing more research and thinking about where to go next with this problem, I ran across a group that has dedicated itself to promoting “Effective Altruism.”

One of the creators of this movement is Scottish philosopher Will MacAskill, no college dropout. He was a research fellow at the Global Priorities Institute at the University of Oxford, co-founded a philanthropic group called Giving What We Can, and in 2015 published a book called “Doing Good Better.” He taught at the university level for 10 years, like me. In 2020 he co-authored “Moral Uncertainty,” and in 2022 he published “What We Owe the Future.”

He began to get famous with TED talks on YouTube, like this one on Oct 3, 2018.

What are the most important moral problems of our time?

Of all the problems facing humanity, which should we focus on solving first? In a compelling talk about how to make the world better, moral philosopher Will MacAskill provides a framework for answering this question based on the philosophy of “effective altruism” — and shares ideas for taking on three pressing global issues.

At a conference in San Francisco that same year, he did another one on “Why should effective altruists embrace uncertainty?”

Probabilistic thinking is only a few centuries old, we have very little understanding on how most of our actions affect the long-term future, and prominent members of the effective altruism community have changed their minds on crucial considerations before. These are just three of the reasons that Will MacAskill urges effective altruists to embrace uncertainty, and not become too attached to present views. This talk was the closing talk for EA Global: San Francisco 2018.

He’s also appeared on a few television talk shows, including “The Daily Show.”

The group, based in the UK, has a website where the mission and goals are explained, beginning with this essay.

Effective altruism is a project that aims to find the best ways to help others, and put them into practice.

It’s both a research field, which aims to identify the world’s most pressing problems and the best solutions to them, and a practical community that aims to use those findings to do good.

This project matters because, while many attempts to do good fail, some are enormously effective. For instance, some charities help 100 or even 1,000 times as many people as others, when given the same amount of resources.

This means that by thinking carefully about the best ways to help, we can do far more to tackle the world’s biggest problems.

Effective altruism was formalized by scholars at Oxford University, but has now spread around the world, and is being applied by tens of thousands of people in more than 70 countries.

People inspired by effective altruism have worked on projects that range from funding the distribution of 200 million malaria nets, to academic research on the future of A.I., to campaigning for policies to prevent the next pandemic.

They’re not united by any particular solution to the world’s problems, but by a way of thinking. They try to find unusually good ways of helping, so that a given amount of effort goes an unusually long way.

They’ve identified some of the biggest problems to tackle, including nuclear proliferation, biohazards that could cause the next pandemic, providing medical supplies in poor countries and advocating for an end to factory farming. But the one I want to focus on is what to do about the growing threats from Artificial Intelligence.

What this group wants to do is help create a field of A.I. alignment research.

“Artificial intelligence (AI) is progressing rapidly. The leading A.I. systems are now able to engage in limited conversation, solve college-level maths problems, explain jokes, generate extremely realistic images from text, and do basic coding. None of this was possible just ten years ago. The ultimate goal of the leading A.I. labs is to develop A.I. that is as good as, or better than, human beings on all tasks.

“It’s extremely hard to predict the future of technology, but various arguments and expert surveys suggest that this achievement is more likely than not this century. And according to standard economic models, once general A.I. can perform at human level, technological progress could dramatically accelerate. The result would be an enormous transformation, perhaps of a significance similar to or greater than the industrial revolution in the 1800s.

“If handled well,” they say, “this transformation could bring about abundance and prosperity for everyone. If handled poorly, it could result in an extreme concentration of power in the hands of a tiny elite.”

That seems to be the trend.

“In the worst case, we could lose control of the A.I. systems themselves. Unable to govern beings with capabilities far greater than our own, we would find ourselves with as little control over our future as chimpanzees have control over theirs.

“This means this issue could not only have a dramatic impact on the present generation, but also on all future generations.”

This makes it especially pressing from a “longtermist” perspective, a school of thinking which holds that improving the long-term future is a key moral priority of our time.

“How to ensure A.I. systems continue to further human values, even as they become equal (or superior) to humans in their capabilities, is called the A.I. alignment problem, and solving it requires advances in computer science. Despite its potentially historical importance, only a couple of hundred researchers work on this problem, compared to tens of thousands working to make A.I. systems more powerful.”

“One priority is to simply tell more people about the issue,” they say, so we are doing that right here.

The book “Superintelligence,” by Oxford philosopher Nick Bostrom, was published in 2014, making the case for the importance of A.I. alignment. It became a New York Times best-seller.

Another priority is to build a research field focused on this problem.

A.I. pioneer Stuart Russell, and others inspired by effective altruism, founded the Center for Human-Compatible A.I. at the University of California, Berkeley. This research institute aims to develop a new paradigm of A.I. development, “in which the act of furthering human values is central.”

Others have helped to start teams focused on A.I. alignment at major A.I. labs such as DeepMind and OpenAI, and have outlined research agendas for A.I. alignment in works such as “Concrete Problems in A.I. Safety.”

Broken Media

We are now engaged in this issue, and as we have been saying all along, a big part of the problem is our broken news media and social media communications system, and our political system, which must be fixed before any of these other problems can be solved for the long term. No one else seems to be asking the right questions.

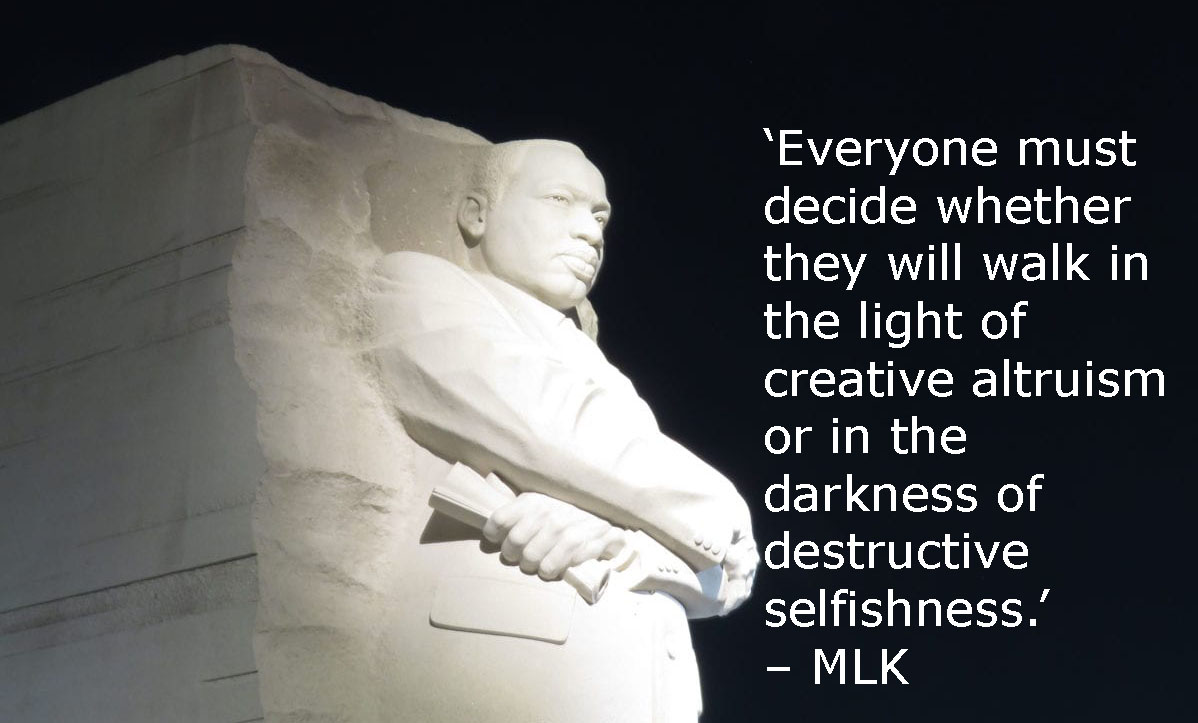

Even Martin Luther King Jr., a smart, well-read man in his time, knew that altruism was the answer.

We need to create the media and social media systems where at least the intellectual and tech-savvy elites can come to find out what is really going on, without all the noise and wasted bandwidth on narcissistic selfies and cat pictures. People need an algorithm for prioritized news and information of utmost importance, and a tool that actually works for activism. We’ve seen the social media apps built on an algorithm designed for maximum nonsense and profit among the lowest-common-denominator members of the general public. So far this has just confused the masses. They need opinion leaders, influential members, just as the early days of propaganda and public opinion research showed.

Even as media criticism led to Facebook, Instagram, Twitter, etc. purposely downplaying news and external links to factual information, public confusion only increased. Look at any Facebook group on politics or the environment, for example, and you will still see people trying to share news links. In fact, that’s mostly what they do on the platforms. To diminishing effect.

We would take the opposite approach, encouraging members to help share solid, factual information with the public, and highlighting it in the algorithm rather than downplaying it. We would work with legitimate news organizations, not hide their work. What the platforms do now is crazy. What kind of drugs are these hacker/programmers high on? Speed? Meth? Ketamine?

A Question For the Bots: Can A.I. Be Designed and Trained to Be Used for Good?

An Initial A.I. Response to the Question: How to Build a Better System to Protect Democracy?

Can Altruism Trump Selfishness to Save Democracy and Planet Earth?

Part III: How to Create a Functioning Communications System to Save Democracy and the Planet

Related

AI showing signs of self-preservation and humans should be ready to pull plug, says pioneer

The office block where AI ‘doomers’ gather to predict the apocalypse

